Explainable AI: Unlock the Black Box in AI

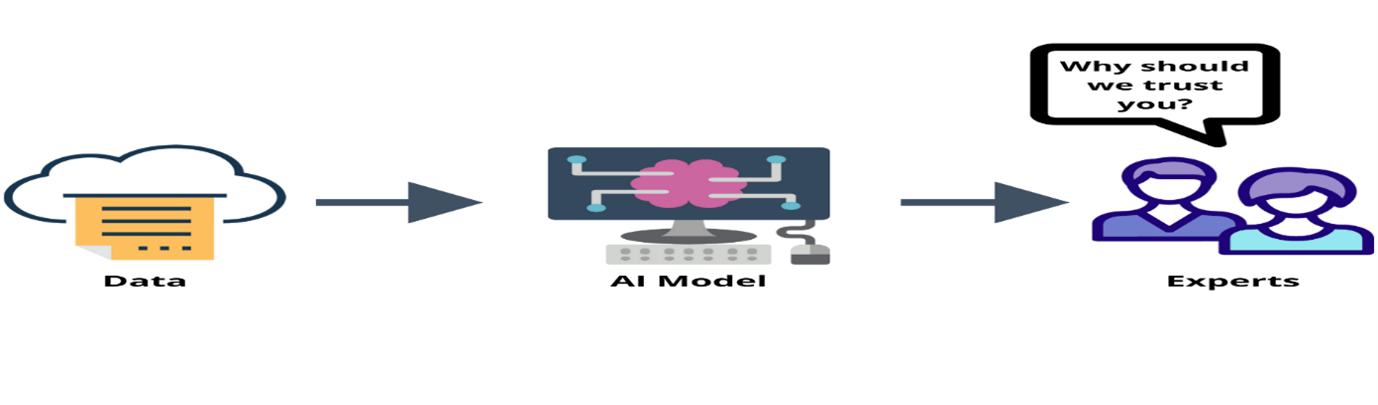

Explainable AI (XAI) is an umbrella term for a set of tools, frameworks and algorithms used to understand and interpret the behaviour of Artificial Intelligence models. It is the process of explaining how an algorithm works and what its important findings are, in a way that human experts can easily understand. It is also known as Interpretable AI (IAI) or Transparent AI (TAI) because humans can interpret the decisions or predictions made by the AI. It also helps improve the fairness, transparency and accountability of AI systems and builds trust between machines and humans. In short, Explainable AI describes an AI model that is designed to give a correct explanation of how and why a particular prediction or result was reached.

Before looking at XAI itself, let us compare it with the black box in machine learning and see why Explainable AI is important.

Black Box

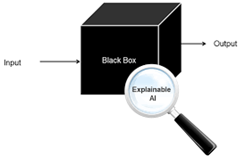

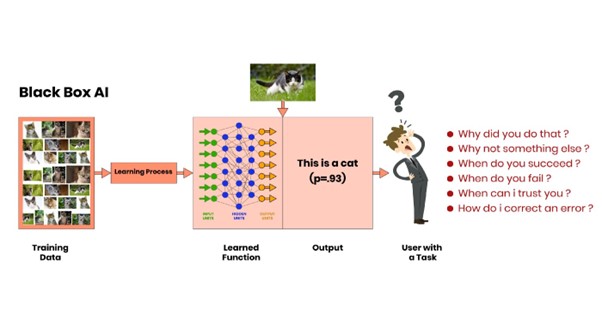

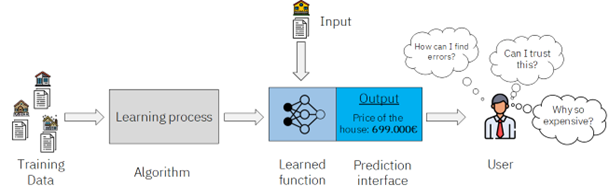

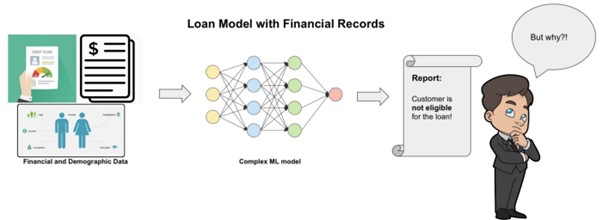

AI The decision-making process of a machine-learning model is often referred to as a black box. In term of Computing and Engineering, ‘Black Box’ is a device or system which allows us to see the input and output part but gives no idea of operations and its working. So, Black box AI refers to any AI based system or tools whose operations are not visible to the user or another interested party which means there will be no idea how the tool turned the input into the output. So, the important question is - Why does Black Box Problem exist and What are the reasons that cause AI black box Problem.

Deep learning is based on artificial neural networks that consist of three or more layers, where each layer learns by recognising patterns in the data. In such networks, we cannot readily identify how the nodes in each layer analyse the data or what they have learnt. We only see the conclusion, without knowing how the model arrived at its output. This lack of transparency is what fuels the black box AI problem, which is why we need to unlock this ‘black box’ and make sense of the model's decisions through explainable AI.
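To make this concrete, here is a minimal sketch in plain Python of a forward pass through a tiny network with an input layer, one hidden layer and an output layer. The weights, biases and input values are made up for illustration and do not come from any trained model. The point is that the hidden-layer activations are just numbers: nothing in them states which pattern a node has learnt, and real networks contain millions of such values.

```python
import math

# Hypothetical, hand-picked parameters for a 3-input -> 2-hidden -> 1-output network.
# In a real model these values would be learnt from training data.
W_HIDDEN = [[0.8, -1.2, 0.5],
            [-0.3, 0.9, 1.1]]
B_HIDDEN = [0.1, -0.2]
W_OUT = [1.5, -2.0]
B_OUT = 0.3


def sigmoid(z: float) -> float:
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def forward(x: list[float]) -> tuple[list[float], float]:
    """Return the hidden-layer activations and the final output score."""
    # Each hidden node computes a weighted sum of the inputs plus a bias.
    hidden = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
              for row, b in zip(W_HIDDEN, B_HIDDEN)]
    # The output node does the same with the hidden activations.
    output = sigmoid(sum(w * h for w, h in zip(W_OUT, hidden)) + B_OUT)
    return hidden, output


hidden, output = forward([1.0, 0.5, 2.0])
print("hidden activations:", hidden)  # plain numbers with no built-in meaning
print("output score:", output)
```

Changing any weight changes the output, but reading the weights or activations does not tell a human why this input produced this score. That question is what XAI techniques try to answer.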

Let us understand this with an example. Suppose we train a deep learning model on images of cats, and it predicts that an image is a ‘Cat’. But what if we ask the model why it predicted a cat and not something else? Although it is a simple question, the model cannot answer it, because its predictions are made without any explanation or justification.

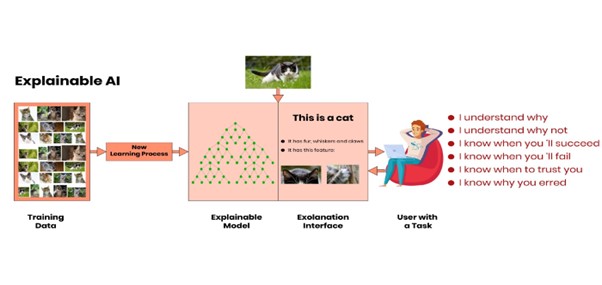

Explainable AI or Interpretable AI

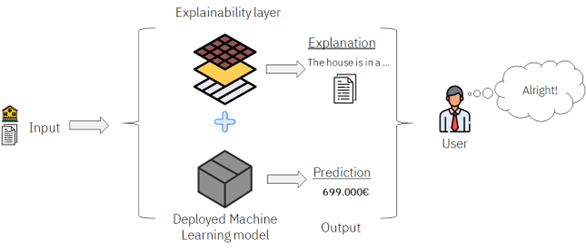

As the name suggests, Explainable AI (XAI) refers to Artificial Intelligence systems or tools that generate predictions or results which humans can easily interpret and explain. XAI aims to reveal how the black box decisions of AI systems are produced, so that AI becomes transparent in its operations and human users can trust its decisions.
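One common way XAI tools look inside a black box is to treat the model purely as a function: change one input at a time and watch how the output moves. The sketch below shows a simple perturbation (occlusion-style) attribution in plain Python. The `black_box` function, the input values and the all-zero baseline are hypothetical stand-ins. The explainer only ever calls the model and never inspects its internals, so the same idea applies to any model that can be queried.

```python
from typing import Callable, Sequence


def perturbation_attribution(
    model: Callable[[Sequence[float]], float],
    x: Sequence[float],
    baseline: Sequence[float],
) -> list[float]:
    """Score each feature by how much the output drops when that feature
    alone is replaced by its baseline value."""
    original = model(x)
    scores = []
    for i in range(len(x)):
        perturbed = list(x)
        perturbed[i] = baseline[i]  # "switch off" one feature at a time
        scores.append(original - model(perturbed))
    return scores


# Hypothetical stand-in for a black box: the explainer only calls it.
def black_box(x: Sequence[float]) -> float:
    return 3.0 * x[0] + 0.5 * x[1] * x[2]


scores = perturbation_attribution(black_box, x=[2.0, 4.0, 1.0], baseline=[0.0, 0.0, 0.0])
print(scores)  # → [6.0, 2.0, 2.0]
```

Here the first feature gets the largest score. Because the second and third features interact through a product, the three scores add up to 10.0 even though the output only moved 8.0 from the baseline. Methods based on Shapley values, such as SHAP, are designed to share such interaction effects out consistently; this sketch does not attempt that.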

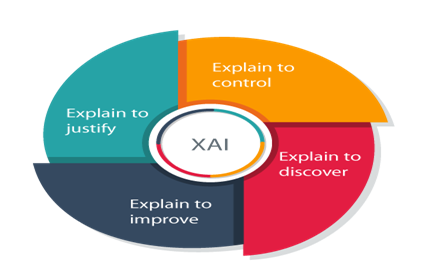

With the growth of AI technology, the major concerns Artificial Intelligence faces today include trust, privacy, confidence, safety and acceptance. For example, if an AI system goes wrong, e.g. a self-driving car has an accident, who should be held responsible or liable? People often struggle to trust the decisions and answers that AI-powered tools provide. The four most essential features of an explainable AI model are therefore:

- Clarity (Transparency)

- Ability to question

- Adaptable (Easy to understand)

- Fair (Lack of Bias)

XAI helps make decision models more accurate, fair and transparent, because errors and biases in their decisions become easier to spot. This in turn helps organisations adopt a responsible approach to AI development.

Real-World Use Cases of Explainable AI

Explainable AI has use cases in various domains, including medicine, defence, banking, manufacturing, autonomous vehicles and finance.

Some real examples of Explainable AI use cases are:

a) Price Prediction

Take real estate, where a machine learning model helps predict property prices. With XAI, it becomes easy to provide local explanations, i.e. explanations of an individual prediction. This lets us answer questions such as: why is the price of this property high?
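For a linear price model, a local explanation can be computed exactly: each feature's contribution is its coefficient multiplied by how far the property's value is from the average value. The sketch below assumes a hypothetical model with invented coefficients, averages and a made-up property; it is not fitted to real data. For linear models whose features are treated as independent, this is the same attribution that Shapley-value methods such as SHAP produce.

```python
# Hypothetical linear price model: price = INTERCEPT + sum(coefficient * feature).
# All numbers are invented for illustration, not fitted to real data.
COEFFICIENTS = {"area_sqft": 150.0, "bedrooms": 10_000.0, "distance_to_city_km": -2_000.0}
INTERCEPT = 50_000.0
# Hypothetical average feature values over the training data.
AVERAGES = {"area_sqft": 1_000.0, "bedrooms": 2.0, "distance_to_city_km": 10.0}


def predict(house: dict[str, float]) -> float:
    """Predicted price of a property under the linear model."""
    return INTERCEPT + sum(COEFFICIENTS[f] * house[f] for f in COEFFICIENTS)


def explain(house: dict[str, float]) -> dict[str, float]:
    """Contribution of each feature relative to an average property.
    For a linear model these add up exactly to
    predict(house) - predict(AVERAGES)."""
    return {f: COEFFICIENTS[f] * (house[f] - AVERAGES[f]) for f in COEFFICIENTS}


house = {"area_sqft": 1_800.0, "bedrooms": 3.0, "distance_to_city_km": 2.0}
contributions = explain(house)
# List the features from most to least influential.
for feature, value in sorted(contributions.items(), key=lambda kv: -abs(kv[1])):
    print(f"{feature:>22}: {value:+,.0f}")
print("predicted price:", predict(house))
print("average-property price:", predict(AVERAGES))
```

For this hypothetical property, floor area contributes +120,000, closeness to the city +16,000 and the extra bedroom +10,000. Together they account for the full 146,000 gap between its predicted price (346,000) and that of the average property (200,000), which is exactly the kind of answer to "why is the price high?" that a local explanation provides.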

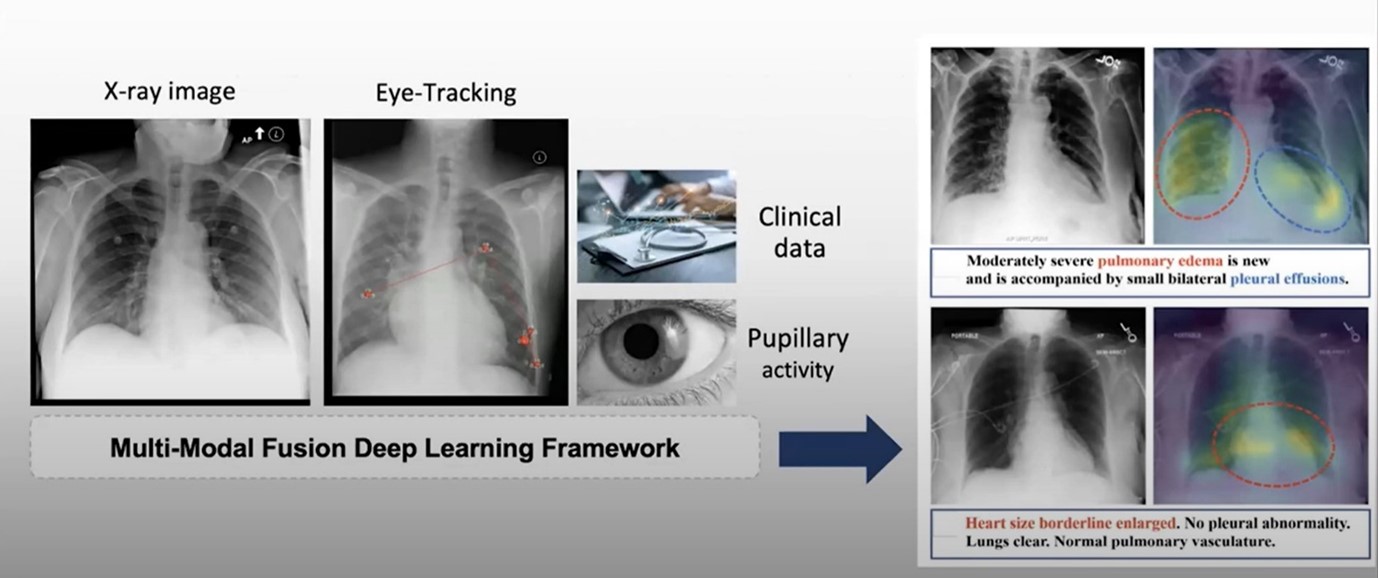

b) Healthcare

Nowadays, healthcare devices and equipment are integrated with state-of-the-art technology that uses AI and machine learning to predict the occurrence of health conditions. Medical AI devices and applications therefore need to be more transparent to earn the trust of doctors. XAI provides interpretable explanations and easy-to-understand representations that allow doctors, patients and other stakeholders to understand the reasons behind a recommendation.

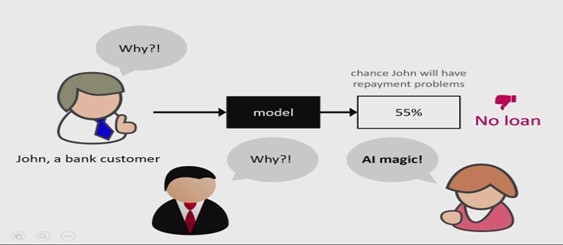

c) Banking

The emerging field of explainable AI (XAI) can help banks smooth the customer experience, provide greater clarity, and improve transparency and trust. It can help banks in areas such as fraud detection, customer engagement and payment exceptions.

About the FutureSkills PRIME Programme

The FutureSkills PRIME Programme is a joint initiative of MeitY and NASSCOM. Various C-DAC/NIELIT centres are involved as Lead Resource Centres for institutionalising blended learning mechanisms in specific emerging technologies. C-DAC Pune has been entrusted with the responsibility of serving as the Lead Resource Centre for Artificial Intelligence technology.

The courses run under the FutureSkills PRIME Programme are:

1. Bridge Course

The course is specifically designed to create awareness of data science, machine learning and deep learning tools and techniques among participants, so that they can recommend and apply these technologies in real life and at their workplaces. It is meant for graduates, entrepreneurs, interns, fresh recruits, IT professionals, non-IT professionals working in the IT industry, ex-employees and faculty members.

2. Training of Government Officials (GOT)

Under this programme, government officials are trained in emerging AI technologies, which helps them learn about cutting-edge developments and upskill so they can do their work differently, for example by creating new methods for documentation.

3. Training of Trainers (TTT)

This course provides an overview of Artificial Intelligence, its principles and approaches, with which faculty members can enhance their knowledge of AI, Machine Learning, Deep Learning, NLP, Computer Vision and their applications.